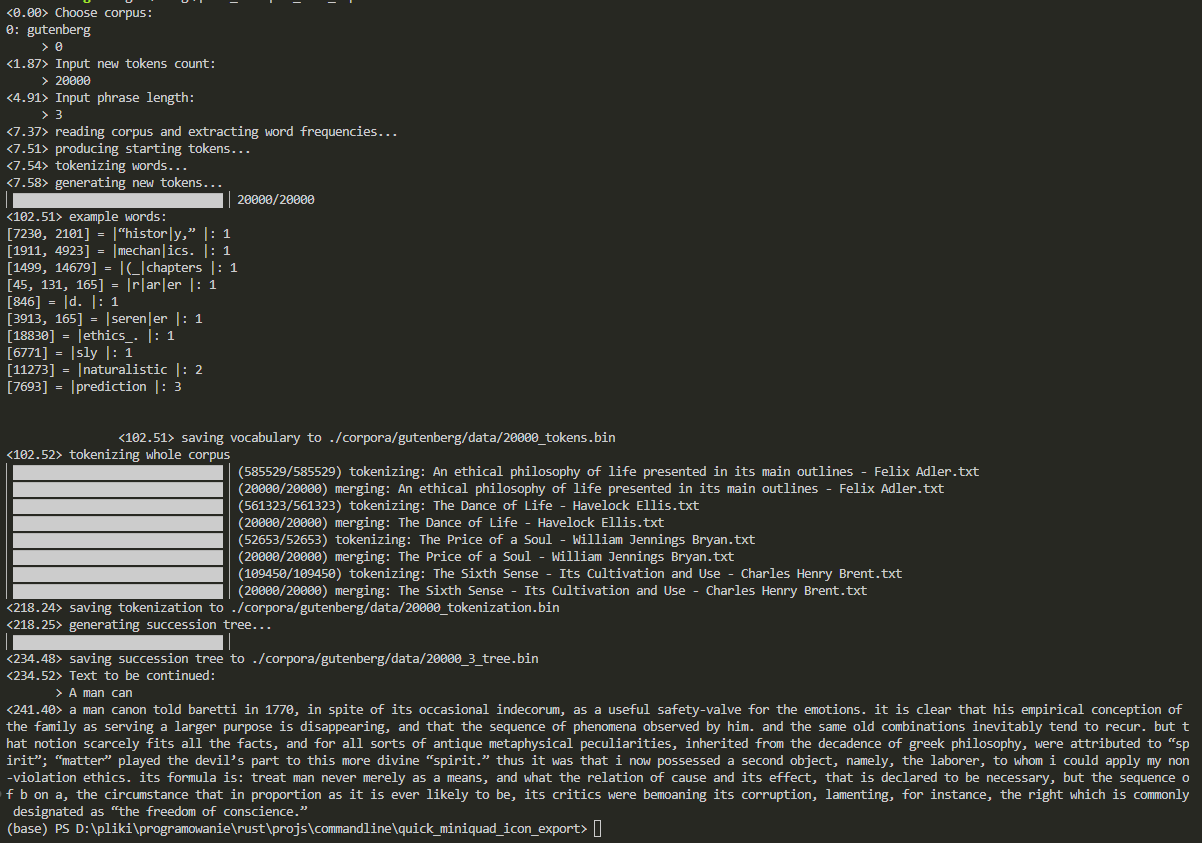

This is my take at LLMs (although it turned out to be a Little Languege Model). It reads a corpus of text, analyzes the frequencies of sequences of letters and tokenizes the whole corpus. It then generates a succession tree - a tree representing the sequences of tokens in the corpus, together with their frequencies. The program can then use the tree to generate new text, which is similar to the original corpus.